Ignacio Martinez-Alpiste, Jose M. Alcaraz Calero, Qi Wang, Gelayol Golcarenarenji, Enrique Chirivella-Perez and Pablo Salva-Garcia, University of the West of Scotland, United Kingdom

Published: 15 Oct 2020

CTN Issue: October 2020

A note from the editors:

Seamless connectivity for patients, first responders and health care professionals, including medical centres, is becoming an important aspect of the quality of emergency medical care. 5G networks and related technologies can significantly improve “care on the go”, assisting first responders and health care service providers by enabling guaranteed wireless connectivity and real-time multimedia communications with hospitals in advance of patient arrival. In this article, Ignacio, Jose, Qi, Gelayol, Enrique and Pablo consider network slicing as one of the key elements enabling high-quality real-time multimedia communication between emergency medical personnel and hospitals. They present a network slicing architecture and its implementation as part of their 5G trials for the European project SliceNet, and discuss the technical requirements for real-time video and information transmission. They also argue that such shared but highly reliable connectivity could make ambulances more intelligent, enabling emerging tools such as machine learning and artificial intelligence to further improve real-time services.

Turn that flashing light on and deliver your feedback and comments.

Enjoy.

Muhammad Zeeshan Shakir, Editor

Alan Gatherer, CTN Editor-in-Chief

5G-Based Smart Ambulance: The Future of the Emergency Service in the Global Pandemic and Beyond

Introduction

Smart Ambulances empowered by next-generation (5G) mobile networking technologies and machine learning-based Artificial Intelligence (AI) will greatly facilitate emergency management services. A 5G-connected autonomous eHealth ambulance can act as a high-speed mobile connection for the emergency medical force. Not only the ambulance but also the paramedic staff will be equipped with audio-visual sensors, such as head cameras, to capture the environment and the physical appearance of the patient (or victim). In addition, the patient will be fully monitored with wearables that check their vital signs. The acquired information is delivered to the awaiting accident and emergency department team at the destination hospital. These communications could optionally be shared with other specialists outside the hospital to support timely diagnosis and treatment en route, especially when medical services are constrained by the COVID-19 pandemic. 5G network slicing technologies guarantee the quality of onboard real-time streaming of patients' medical conditions to the awaiting hospitals and emergency centers. Moreover, the 5G Smart Ambulance is equipped with an AI core that uses front cameras to study the road by recognizing possible obstacles, so that it can react in time and maintain a swift but constant speed, creating a controlled environment for safe patient treatment. This study of road conditions will also help other ambulances change their routes according to the current state of the road.

In the following, two key enablers for the Smart Ambulance platform are highlighted. The first is network slicing, which provides end-to-end communication quality assurance for this mission-critical eHealth service, especially video streaming with video optimizer Virtual Network Functions (VNFs). The second is the detection of small-sized obstacles on the road from far distances, which improves road safety, helps keep a fast but stable speed, and also assists cooperative driving between Smart Ambulances.

Assured eHealth Services with Network Slicing

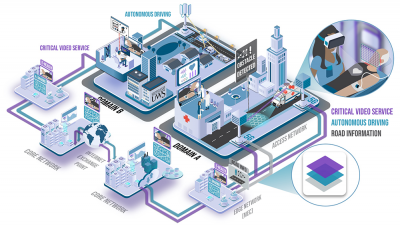

Figure 1 illustrates a 5G-connected Smart Ambulance use case. The network creates three main network slices for delivering multimedia ambulance information across multiple domains. In this scenario, both the Smart Ambulance and the destination hospital are located in domain A, whereas a specialist is in domain B, e.g., working at home. Network slicing establishes reliable, real-time, low-latency transmissions to the medical specialist, who assists the onboard medical team remotely. It needs to meet stringent requirements to deliver assured mission-critical communication services on the move. These heightened requirements may include guaranteed Quality of Service (QoS) for all communications, preserved Quality of Experience (QoE), i.e., perceived quality, for video streaming services, and continuous large-area service coverage potentially spanning multiple network service domains. These requirements can be primarily addressed by advanced network slicing technologies developed in recent projects. This article focuses on the EU 5G-PPP SliceNet project [1][2], which has tackled the challenges in network slicing and helped satisfy the Smart Ambulance requirements [3]. Network slicing allows the creation of multiple independent logical networks with different network service characteristics over the same physical network. As shown in Figure 2, SliceNet takes a layered architectural approach to achieve advanced Multi-role, Multi-level and Multi-domain Network Slicing, explained as follows.
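To make the idea of several independent logical networks over one physical network more concrete, the following Python sketch models slices as bandwidth reservations with a simple admission check. It is a toy illustration under stated assumptions, not SliceNet's orchestration logic: the class names, the slice names and their bandwidth and latency figures are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Slice:
    """One logical network carved out of shared physical capacity (toy model)."""
    name: str
    guaranteed_mbps: float  # bandwidth reserved exclusively for this slice
    max_latency_ms: float   # latency target the slice is expected to meet


@dataclass
class PhysicalNetwork:
    capacity_mbps: float
    slices: list = field(default_factory=list)

    def reserved_mbps(self) -> float:
        """Total bandwidth already promised to admitted slices."""
        return sum(s.guaranteed_mbps for s in self.slices)

    def admit(self, new_slice: Slice) -> bool:
        """Admit a slice only if its guarantee fits in the unreserved capacity."""
        if self.reserved_mbps() + new_slice.guaranteed_mbps <= self.capacity_mbps:
            self.slices.append(new_slice)
            return True
        return False


# Hypothetical slices for one ambulance scenario (figures are illustrative only).
net = PhysicalNetwork(capacity_mbps=100)
print(net.admit(Slice("ambulance-video", 40, 100)))  # True
print(net.admit(Slice("vital-signs", 5, 50)))        # True
print(net.admit(Slice("road-information", 2, 10)))   # True
print(net.admit(Slice("bulk-download", 60, 1000)))  # False: 47 + 60 > 100
```

Reserving rather than merely sharing capacity is what keeps one slice's load from degrading another's; a real orchestrator also has to account for RAN, core and compute resources, which this sketch ignores.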

Firstly, SliceNet supports multiple business roles for network slicing-based services, including 4G/5G network infrastructure owners and operators; virtual mobile operators over the same physical infrastructure, or Network Service Providers (NSPs) over a particular geographical area; and Digital Service Providers (DSPs) that coordinate different NSPs to offer federated network slice-based services across various service providers.

Secondly, SliceNet enables large-scale end-to-end (E2E) network slicing across different administrative domains, e.g., managed or owned by different NSPs. In each of these domains, SliceNet performs network slicing over both Radio Access Network (RAN) and Core Network. To meet large-scale service coverage, different operators can be enabled to share their infrastructures across multiple network service domains, through either a peer-to-peer approach or a coordinated approach e.g., via a Digital Service Provider. By ensuring the

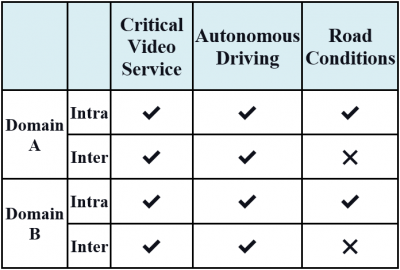

service quality, Smart Ambulances can keep critical communications with medical and autonomous driving control centers in real time. These communications are crucial when a patient is being monitored for medical conditions such as coronavirus-related pneumonia, heart attack, stroke, etc. within the ambulance on its way to the hospital. In addition, it is worth noting that not all the services within the Smart Ambulance use case would require multi-domain network slicing, which may differ according to the specific service subset. In an intra-domain offering, the E2E network slicing takes place in one domain, as a case in point, the ambulance and the hospital being in the same service domain. In an inter-domain offering, E2E network slicing involves more than one domain. As shown in Table 1, autonomous driving and video streaming have different requirements in service domains. As specialists may be located in different domains, the video service and vital signs information will be transmitted over an inter-domain slice. By contrast, the information captured from the AI core is just transmitted over the intra-domain and stored in the edge network in a Mobile/Multi-access Edge Network (MEC) platform to provide other ambulances with information of real-time road conditions.
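The choice between intra-domain and inter-domain slicing described above reduces to asking whether every endpoint of a service lies in the ambulance's own domain. The sketch below uses hypothetical domain labels ("A", "B") matching the Figure 1 scenario and a hypothetical `slice_scope` helper; it illustrates the rule rather than any SliceNet interface.

```python
from enum import Enum


class Scope(Enum):
    INTRA_DOMAIN = "intra-domain"
    INTER_DOMAIN = "inter-domain"


def slice_scope(source_domain: str, destination_domains: list[str]) -> Scope:
    """Inter-domain if any destination lies outside the source's domain."""
    if all(d == source_domain for d in destination_domains):
        return Scope.INTRA_DOMAIN
    return Scope.INTER_DOMAIN


# Figure 1 scenario: ambulance and hospital in domain A, specialist in domain B.
video = slice_scope("A", ["A", "B"])  # hospital + remote specialist
road_info = slice_scope("A", ["A"])   # local MEC platform only
print(video.value, road_info.value)   # inter-domain intra-domain
```

In practice the decision would also depend on which operators have peering or DSP-coordinated agreements, which this sketch does not model.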

Thirdly, SliceNet realises multi-level network slicing as a unique innovation and achievement. At the cloud level, a network slice chains a selected set of VNFs, together with the required resources, across network segments for the required service. To guarantee QoS at the data plane level, data plane programmability should be further explored to achieve either hardware-accelerated network slicing [3][4] or purely software-based network slicing [5]. In particular, high-quality live video streaming for Smart Ambulances needs to be assured in the face of rising competing background traffic, especially from other video applications. While working from home due to COVID-19 has significantly increased media consumption, it is indisputable that critical services such as ambulances must be prioritized to assist the emergency response to COVID-19. As shown in Figure 1, a Smart Ambulance is streaming live Ultra-High-Definition (UHD) video during an emergency service. Video streaming imposes a set of QoS requirements on the network to ensure smooth playback. For instance, a latency greater than 100 milliseconds will produce lagging, buffering and an overall slowdown of the video service. Moreover, to avoid packet loss during transmission, adequate bandwidth, normally ranging from 20 to 40 Mbps, must be ensured for UHD video streaming. To tackle this, a 5G-aware video optimizer VNF [6] can be employed as part of the Smart Ambulance network slice to preserve video quality even in a heavily loaded network.
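The effect of prioritization under contention can be illustrated with a simple allocation model: the ambulance slice is served first, and the remaining link capacity is shared max-min fairly among background flows. This is a hypothetical sketch, not SliceNet's data-plane scheduler or the video optimizer VNF; the 100 Mbps link, the flow demands, the 50 ms latency and the helper names are assumptions, while the 20 Mbps and 100 ms thresholds are the figures quoted above. "Without slicing" is modelled, as an assumption, as plain fair sharing.

```python
UHD_MIN_MBPS = 20.0     # lower end of the 20-40 Mbps range quoted for UHD video
MAX_LATENCY_MS = 100.0  # above this, lag and buffering are expected


def meets_uhd_requirements(bandwidth_mbps: float, latency_ms: float) -> bool:
    return bandwidth_mbps >= UHD_MIN_MBPS and latency_ms <= MAX_LATENCY_MS


def allocate(capacity_mbps: float, priority_demand_mbps: float,
             best_effort_demands: list[float]) -> tuple[float, list[float]]:
    """Serve the prioritized slice first, then split the rest max-min fairly."""
    priority = min(priority_demand_mbps, capacity_mbps)
    remaining = capacity_mbps - priority
    shares = [0.0] * len(best_effort_demands)
    # Visit flows from smallest to largest demand.
    pending = sorted(range(len(best_effort_demands)),
                     key=lambda i: best_effort_demands[i])
    while pending:
        fair_share = remaining / len(pending)
        smallest = pending[0]
        if best_effort_demands[smallest] <= fair_share:
            # Small demands are fully satisfied; the leftover returns to the pool.
            shares[smallest] = best_effort_demands[smallest]
            remaining -= best_effort_demands[smallest]
            pending.pop(0)
        else:
            # Every remaining flow wants more than an equal split: cap them all.
            for i in pending:
                shares[i] = fair_share
            break
    return priority, shares


# Without slicing: a 40 Mbps ambulance stream competes with five 25 Mbps home videos.
_, shared = allocate(100, 0, [40] + [25] * 5)
print(round(shared[0], 2), meets_uhd_requirements(shared[0], 50))  # 16.67 False

# With a prioritized slice (assuming 50 ms latency): the ambulance keeps 40 Mbps.
video_mbps, others = allocate(100, 40, [25] * 5)
print(video_mbps, meets_uhd_requirements(video_mbps, 50))  # 40 True
```

Max-min fairness is used here only as a stand-in for "best effort"; real networks under load can treat the ambulance stream worse than an equal share, which strengthens rather than weakens the case for a dedicated slice.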

The SliceNet network slicing achievement for eHealth use cases such as the Smart Ambulance has been featured in EU 5G trials [7]. Figures 3 and 4 [8] empirically compare the quality of the video streamed from the ambulance without and with network slicing, respectively, as perceived by the end user of the video, e.g., the remote specialist. While the video is distorted in Figure 3 without network slicing, Figure 4 shows how deploying network slicing preserves the high quality of the live video. Furthermore, each ambulance can have more than one network slice for its various critical communication flows, according to their divergent QoS requirements. For instance, real-time video and vital signs imagery require enhanced mobile broadband communications, whilst real-time road information demands ultra-reliable low-latency communications.
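The idea that one ambulance may use several slices can be sketched as a mapping from traffic classes to slice types, following the split in the paragraph above. The flow names and the `group_by_slice` helper are hypothetical; only the eMBB/URLLC assignment comes from the text.

```python
from enum import Enum


class SliceType(Enum):
    EMBB = "enhanced mobile broadband"
    URLLC = "ultra-reliable low-latency communications"


# Hypothetical traffic classes, mapped to slice types as described in the text.
TRAFFIC_TO_SLICE = {
    "live_video": SliceType.EMBB,
    "vital_signs_imagery": SliceType.EMBB,
    "road_information": SliceType.URLLC,
}


def group_by_slice(flows: list[str]) -> dict[SliceType, list[str]]:
    """Group one ambulance's active flows by the slice type that should carry them."""
    groups: dict[SliceType, list[str]] = {}
    for flow in flows:
        groups.setdefault(TRAFFIC_TO_SLICE[flow], []).append(flow)
    return groups


slices = group_by_slice(["live_video", "vital_signs_imagery", "road_information"])
for slice_type, flows in slices.items():
    print(slice_type.name, flows)
# EMBB ['live_video', 'vital_signs_imagery']
# URLLC ['road_information']
```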

![Figure 3: Video Streaming without Network Slicing [8].](/sites/default/files/styles/400wide/public/images/2020-2020-11/Fig3.jpg?itok=cvjMQZ-h)

![Figure 4: Video Streaming with Network Slicing [8].](/sites/default/files/styles/400wide/public/images/2020-2020-11/Fig4.jpg?itok=3o1ZFXDt)

AI-Assisted Smart Ambulance Driving with Far-Distance Object Detection

Autonomous or AI-assisted semi-autonomous driving is highly desirable for mission-critical mobile services such as the Smart Ambulance, where errors by a human driver must be minimized to improve road safety. In addition, for safe operation of a Smart Ambulance, abrupt braking should also be minimized. Features such as human and vehicle detection, collision avoidance with automatic braking and real-time optimal pathfinding have improved the driving experience, leading to enhanced vehicle safety and reductions in accidents and mortality rates. Hence, equipping ambulances with a robust, accurate object detector will provide situational awareness of the surrounding environment, along with the ability to predict, avoid and resolve possible collisions and accident risks without human intervention. An integrated AI core will also help maintain a constant speed by avoiding sudden, hard braking, which causes sharp deceleration, thus allowing a swift but smooth ambulance ride and safe patient care.

Each Smart Ambulance will be equipped with an onboard Graphics Processing Unit (GPU) able to execute the AI model in real time. The onboard GPU offers a favorable trade-off between accuracy and speed, whereas offloading the model to an external server would add further network overhead, since the server would have to receive separate video streams from many ambulances at the same time. Onboard execution therefore reduces both the latency and the network overhead of running the algorithm. The ambulance will also assess the road condition and the surrounding environment, and the results will be sent (through a dedicated network slice) to the edge network located in the corresponding domain, as illustrated in Figure 1, where they are stored in the local domain's Mobile/Multi-access Edge Computing (MEC) platform to provide useful information, such as on-road obstacles and accidents, to other Smart Ambulances in the area. This feature allows other Smart Ambulances in the same area to avoid compromised roads already detected by other ambulances.
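The cooperative use of the MEC platform can be pictured as a shared store of hazard reports keyed by road segment, which other ambulances consult before choosing a route. The following sketch is hypothetical throughout: the `EdgeHazardStore` class, the segment identifiers and the 10-minute expiry are assumptions for illustration, not an interface from the article or from any MEC standard.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class Hazard:
    road_segment: str   # hypothetical road-segment identifier
    kind: str           # e.g. "obstacle" or "accident"
    reported_by: str    # ambulance that detected it
    reported_at: float  # seconds since the epoch


class EdgeHazardStore:
    """In-memory stand-in for a road-condition service hosted on the MEC platform."""

    def __init__(self, ttl_s: float = 600.0):
        self.ttl_s = ttl_s  # assumed: reports older than this are ignored
        self._hazards: list[Hazard] = []

    def report(self, segment: str, kind: str, ambulance_id: str,
               now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        self._hazards.append(Hazard(segment, kind, ambulance_id, now))

    def active_hazards(self, segment: str, now: Optional[float] = None) -> list[Hazard]:
        now = time.time() if now is None else now
        return [h for h in self._hazards
                if h.road_segment == segment and now - h.reported_at <= self.ttl_s]


def choose_route(routes: list[list[str]], store: EdgeHazardStore,
                 now: Optional[float] = None) -> Optional[list[str]]:
    """Return the first route with no active hazard on any of its segments."""
    for route in routes:
        if not any(store.active_hazards(seg, now) for seg in route):
            return route
    return None


store = EdgeHazardStore()
store.report("seg-12", "accident", "ambulance-1", now=1000.0)
routes = [["seg-12", "seg-40"], ["seg-13", "seg-40"]]
print(choose_route(routes, store, now=1100.0))  # ['seg-13', 'seg-40']
print(choose_route(routes, store, now=2000.0))  # ['seg-12', 'seg-40'] (report expired)
```

Only compact detection results cross the network here, which is consistent with the point above that raw video from every ambulance need not be shipped to a central server.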

The AI model used in the Smart Ambulance is a cost-effective, reliable object detector based on the latest Convolutional Neural Network (CNN) technologies. This CNN-based UWS model builds on the model employed in [9] for finding missing people in the Scottish wilderness [10], and it has been tailored to run in real time, with very high accuracy, on the embedded GPU in the ambulance. The algorithm takes advantage of many features that improve the recognition of objects at multiple scales (in terms of varying object sizes and distances). It considers three main factors: the position of the object, the type of the object and its distance from the ambulance.
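The article does not detail how the detector estimates distance. One common monocular approximation, shown here purely as an assumption, is the pinhole-camera relation d = f·H/h, where f is the focal length in pixels, H an assumed real-world object height and h the bounding-box height in pixels. The `Detection` type, the typical heights and the 1000 px focal length are hypothetical.

```python
from dataclasses import dataclass

# Assumed typical real-world heights in metres (illustrative values).
TYPICAL_HEIGHT_M = {"person": 1.7, "car": 1.5}


@dataclass
class Detection:
    label: str             # type of object
    x_center_px: float     # horizontal position in the image
    bbox_height_px: float  # height of the bounding box in pixels


def estimate_distance_m(det: Detection, focal_length_px: float) -> float:
    """Pinhole-camera estimate: distance = focal length * real height / image height."""
    if det.bbox_height_px <= 0:
        raise ValueError("bounding box height must be positive")
    return focal_length_px * TYPICAL_HEIGHT_M[det.label] / det.bbox_height_px


# With an assumed 1000 px focal length, a pedestrian only 17 px tall is ~100 m away.
far = Detection("person", x_center_px=640, bbox_height_px=17)
near = Detection("person", x_center_px=640, bbox_height_px=170)
print(round(estimate_distance_m(far, 1000), 1))   # 100.0
print(round(estimate_distance_m(near, 1000), 1))  # 10.0
```

The example also shows why low-pixel detection matters: distance and apparent size are inversely proportional, so an object ten times farther away occupies ten times fewer pixels.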

Various AI techniques, such as a Path Aggregation Network (PAN), dilated convolutions and hyperparameter optimization, have been integrated into the model to aggregate parameters from various backbone levels. These features have yielded a significant improvement in the detection of small-sized, low-pixel objects, which enables the recognition of objects at far distances. To achieve these results, the algorithm was trained on a comprehensive dataset tailored for this purpose: different traffic objects and humans were categorized and manually labelled in various weather conditions, locations and illuminations to cover a wide range of road conditions. Since accuracy and speed are the two main aspects of object detection performance, it is essential to ensure that the algorithm runs with both high accuracy and a high frame rate. To operate safely on the road, the AI model must therefore run in real time: missing a frame increases the chance of missing a high-risk situation and could lead to a greater risk of losing control of the ambulance. Taking 30 Frames Per Second (FPS) or more as the threshold for real-time detection, the model achieved an accuracy of 87.94% at a speed of 67 FPS with an input size of 416 pixels in a prototype built for this article, which is suitable for AI-assisted Smart Ambulances.
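The real-time figures above translate into a per-frame time budget and into how much road the ambulance covers between frames. The short sketch below does that arithmetic; the 30 FPS threshold and the 67 FPS result come from the text, while the 60 km/h speed and the helper names are assumptions.

```python
def frame_budget_ms(fps: float) -> float:
    """Processing time available per frame at a given frame rate."""
    return 1000.0 / fps


def is_real_time(measured_fps: float, required_fps: float = 30.0) -> bool:
    """Real-time threshold of 30 FPS, as used in the text."""
    return measured_fps >= required_fps


def metres_per_frame(speed_kmh: float, fps: float) -> float:
    """Distance the ambulance covers between two consecutive frames."""
    return (speed_kmh / 3.6) / fps


print(round(frame_budget_ms(30), 1))       # 33.3
print(round(frame_budget_ms(67), 1))       # 14.9
print(is_real_time(67))                    # True
# At an assumed 60 km/h, each processed frame corresponds to this much road:
print(round(metres_per_frame(60, 30), 2))  # 0.56
print(round(metres_per_frame(60, 67), 2))  # 0.25
```

At 67 FPS the detector leaves roughly half of the 30 FPS budget as headroom, and a dropped frame at 60 km/h means about half a metre of road goes unobserved.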

This far-distance detection capability allows the Smart Ambulance to take a proactive approach by slightly reducing its speed. The detection data is then sent to and saved on an external server located in the edge network, so that other Smart Ambulances can receive it and take immediate action or change their routes in the case of an emergency or accident, as a cooperative driving solution. Figures 5 and 6 highlight the capability of such a Smart Ambulance to recognize a pedestrian from afar and take early action before pedestrians enter a pedestrian crossing. In Figure 5, a partially occluded person is detected by the ambulance (see A). Consequently, the ambulance reacts by braking gently to maintain road safety, as reflected in Figure 6, where the same person (see A) and another one (see B) are detected.
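The benefit of seeing further can be quantified with elementary kinematics: stopping from speed v within distance d at constant deceleration requires a = v²/(2d). The sketch below applies this relation, with an assumed reaction delay, to show how earlier detection allows gentler braking; the speed, distances and delay are illustrative assumptions, not the ambulance's actual control law.

```python
def required_deceleration(speed_kmh: float, distance_m: float,
                          latency_s: float = 0.0) -> float:
    """Constant deceleration (m/s^2) needed to stop before an object at distance_m.

    latency_s is an assumed detection-plus-actuation delay during which the
    ambulance keeps its current speed before braking begins.
    """
    v = speed_kmh / 3.6  # convert km/h to m/s
    braking_distance = distance_m - v * latency_s
    if braking_distance <= 0:
        return float("inf")  # the object is reached before braking can start
    return v * v / (2.0 * braking_distance)


# An ambulance at 36 km/h (10 m/s) with an assumed 0.5 s reaction delay.
for distance in (50.0, 15.0):
    a = required_deceleration(36, distance, latency_s=0.5)
    print(f"object at {distance:.0f} m -> {a:.2f} m/s^2")
# object at 50 m -> 1.11 m/s^2
# object at 15 m -> 5.00 m/s^2
```

Detecting the same pedestrian at 50 m instead of 15 m cuts the required deceleration by a factor of about 4.5, which is the difference between gentle braking and an abrupt stop for the patient and crew.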

Conclusions

5G networks have enabled a myriad of new applications, especially in improving emergency services. The quality of service for such emergency services needs to be guaranteed to fulfil mission-critical requirements. Moreover, an early, appropriate and timely reaction from ambulance services is critical to ensure a safe ride and secure patient care. For that purpose, key enablers for a Smart Ambulance have been discussed. Firstly, network slicing, as a key component of 5G networks, is employed to ensure the quality of service from the ambulance to the hospital and to additional specialists geographically distributed across different network service providers. Secondly, the Smart Ambulance has enabled AI-based features, especially low-pixel object recognition, to react to possible high-risk road conditions and maintain a constant speed so that paramedics can work properly and ensure secure patient care. Thanks to recent advances in 5G networks, machine learning and video optimization technologies, these developments are expected to contribute widely to emergency services in the near future, especially during the global pandemic.

References

- [1] EU 5G-PPP SliceNet project, https://selfnet-5g.eu/.

- [2] Q. Wang, J. Alcaraz Calero, and M. Weiss, “End-to-end Cognitive Network Slicing: The SliceNet Framework for Slice Control, Management and Orchestration”, Special Issue on Network Slicing, in EURESCOM Message, Winter 2018, pp. 9-10, https://www.eurescom.eu/fileadmin/documents/message/Eurescom-message-Winter-2018-Web.pdf.

- [3] Q. Wang, J. M. Alcaraz Calero, R. Ricart-Sanchez, M. Barros Weiss, A. Gavras, N. Nikaein et al., “Enable Advanced QoS-Aware Network Slicing in 5G Networks for Slice-Based Media Use Cases”, in IEEE Transactions on Broadcasting, vol. 65, no. 2, pp. 444-453, June 2019, doi: http://dx.doi.org/10.1109/TBC.2019.2901402.

- [4] R. Ricart-Sanchez, P. Malagon, A. Matencio-Escolar, J. M. Alcaraz Calero, and Q. Wang, “Towards Hardware-Accelerated QoS-aware 5G Network Slicing Based on Data Plane Programmability”, (Wiley) Transactions on Emerging Telecommunications Technologies, in press, Aug. 2019, doi: http://dx.doi.org/10.1002/ett.3726.

- [5] A. Matencio Escolar, Q. Wang, and J. M. Alcaraz Calero, “SliceNetVSwitch: Definition, Design and Implementation of 5G Multi-tenant Network Slicing in Software Data Paths”, in IEEE Transactions on Network and Service Management, in press, Sept. 2020, doi: https://doi.org/10.1109/TNSM.2020.3029653.

- [6] P. Salva-Garcia, J. M. Alcaraz Calero, Q. Wang, M. Arevalillo-Herráez and J. Bernal Bernabe, “Scalable Virtual Network Video-Optimizer for Adaptive Real-Time Video Transmission in 5G Networks”, in IEEE Transactions on Network and Service Management, vol. 17, no. 2, pp. 1068-1081, June 2020, doi: http://dx.doi.org/10.1109/TNSM.2020.2978975.

- [7] EU 5G PPP Trials Working Group, “The 5G PPP Infrastructure - Trials and Pilots Brochure”, Sept. 2019, https://5g-ppp.eu/wp-content/uploads/2019/09/5GInfraPPP_10TPs_Brochure_FINAL_low_singlepages.pdf.

- [8] “eHealth 5G Ambulance”, Beyond5GHub. Accessed: Aug. 10, 2020, http://beyond5ghub.uws.ac.uk/index.php/ehealth-ambulance/.

- [9] I. Martinez-Alpiste, P. Casaseca-de-la-Higuera, J. Alcaraz-Calero, C. Grecos, and Q. Wang, “Smartphone-Based Object Recognition with Embedded Machine Learning Intelligence for Unmanned Aerial Vehicle”, Journal of Field Robotics, in press, Nov. 2019, doi: http://dx.doi.org/10.1002/rob.21921.

- [10] “Police to use AI recognition drones to help find the missing”, BBC News Scotland, United Kingdom, Nov. 2019, https://www.bbc.co.uk/news/uk-scotland-50262650.

Additional Resource

IEEE ComSoc Best Reading list:

- IEEE ComSoc Best Readings in e-Health

- IEEE ComSoc Best Readings in Machine Learning in Communications


Statements and opinions given in a work published by the IEEE or the IEEE Communications Society are the expressions of the author(s). Responsibility for the content of published articles rests upon the authors(s), not IEEE nor the IEEE Communications Society.